Video

Abstract

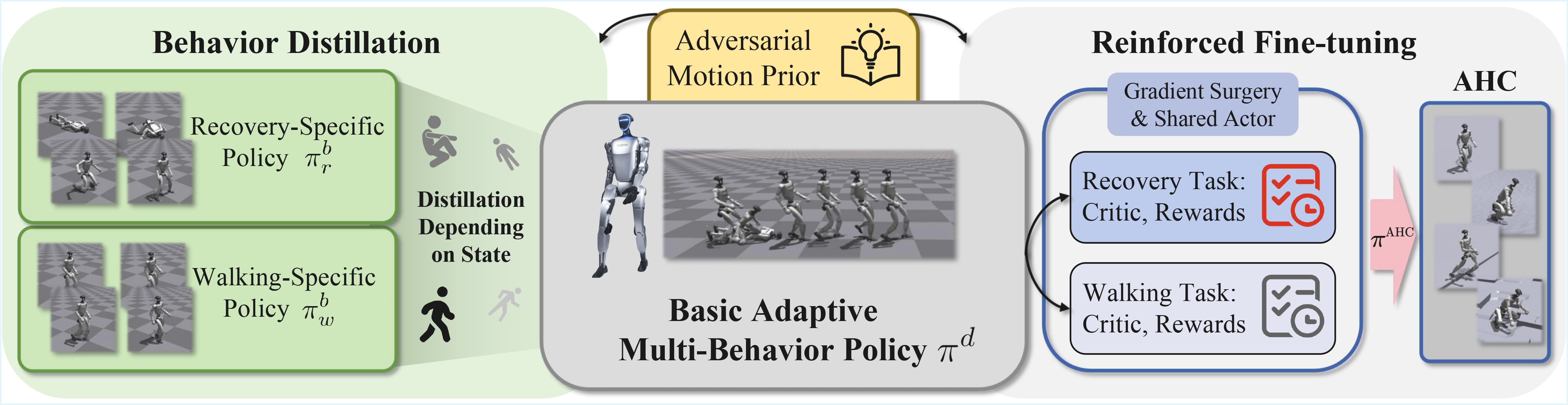

Humanoid robots hold promise for learning a diverse set of human-like locomotion behaviors, including standing up, walking, running, and jumping. However, existing methods predominantly train an independent policy for each skill, yielding behavior-specific controllers that exhibit limited generalization and brittle performance when deployed on irregular terrains and in diverse situations. To address this challenge, we propose Adaptive Humanoid Control (AHC), a two-stage framework that learns an adaptive humanoid locomotion controller across different skills and terrains. Specifically, we first train several primary locomotion policies and distill them, through a multi-behavior distillation process, into a basic multi-behavior controller that switches behaviors adaptively based on the environment. We then perform reinforced fine-tuning, collecting online feedback while the controller performs adaptive behaviors on more diverse terrains, which enhances its terrain adaptability. We conduct experiments both in simulation and in the real world on Unitree G1 robots. The results show that our method exhibits strong adaptability across various situations and terrains.
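To make the first stage more concrete, the following is a minimal sketch of multi-behavior distillation, not the authors' implementation. It assumes two frozen teacher policies (hypothetical linear maps standing in for pre-trained recovery and walking policies), a hypothetical "fallen" flag in the first observation dimension that decides which teacher provides the target, and a small MLP student trained by mean-squared-error regression on randomly sampled observations. All names, dimensions, and hyperparameters are illustrative assumptions; a real pipeline would collect observations from simulator rollouts, and the second stage (reinforced fine-tuning) is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)
OBS_DIM, ACT_DIM = 8, 4  # illustrative sizes, not taken from the paper

# Hypothetical frozen teachers: linear stand-ins for behavior-specific policies.
W_recover = rng.normal(size=(OBS_DIM, ACT_DIM))
W_walk = rng.normal(size=(OBS_DIM, ACT_DIM))


def sample_obs(n):
    """Sample random observations; obs[:, 0] is an assumed 'fallen' flag (0 or 1)."""
    obs = rng.normal(size=(n, OBS_DIM))
    obs[:, 0] = rng.integers(0, 2, size=n)
    return obs


def teacher_action(obs):
    """Pick the target action from the teacher that matches the current situation."""
    fallen = obs[:, :1] > 0.5
    return np.where(fallen, obs @ W_recover, obs @ W_walk)


def init_student(hidden=32):
    """One-hidden-layer MLP student with small random weights."""
    return {
        "W1": rng.normal(scale=0.3, size=(OBS_DIM, hidden)),
        "b1": np.zeros(hidden),
        "W2": rng.normal(scale=0.3, size=(hidden, ACT_DIM)),
        "b2": np.zeros(ACT_DIM),
    }


def student_forward(params, obs):
    h = np.tanh(obs @ params["W1"] + params["b1"])
    return h, h @ params["W2"] + params["b2"]


def distill_step(params, obs, lr=0.05):
    """One gradient step on the MSE between student and teacher actions."""
    target = teacher_action(obs)
    h, pred = student_forward(params, obs)
    err = pred - target
    loss = float(np.mean(err ** 2))
    # Manual backpropagation through the two layers.
    g_pred = 2.0 * err / err.size
    g_pre = (g_pred @ params["W2"].T) * (1.0 - h ** 2)
    params["W2"] -= lr * (h.T @ g_pred)
    params["b2"] -= lr * g_pred.sum(axis=0)
    params["W1"] -= lr * (obs.T @ g_pre)
    params["b1"] -= lr * g_pre.sum(axis=0)
    return loss


student = init_student()
losses = [distill_step(student, sample_obs(256)) for _ in range(500)]
print(f"mean loss, first 50 steps: {np.mean(losses[:50]):.3f}")
print(f"mean loss, last 50 steps:  {np.mean(losses[-50:]):.3f}")
```

The single student network receives no explicit behavior label; it has to infer from the observation which teacher to imitate, which is the sense in which a distilled controller can switch behaviors based on the environment.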

Method

BibTeX

@inproceedings{zhao2025adaptivehumanoidcontrolmultibehavior,
  title={Towards Adaptive Humanoid Control via Multi-Behavior Distillation and Reinforced Fine-Tuning},
  author={Yingnan Zhao and Xinmiao Wang and Dewei Wang and Xinzhe Liu and Dan Lu and Qilong Han and Peng Liu and Chenjia Bai},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence (AAAI)},
  year={2026}
}